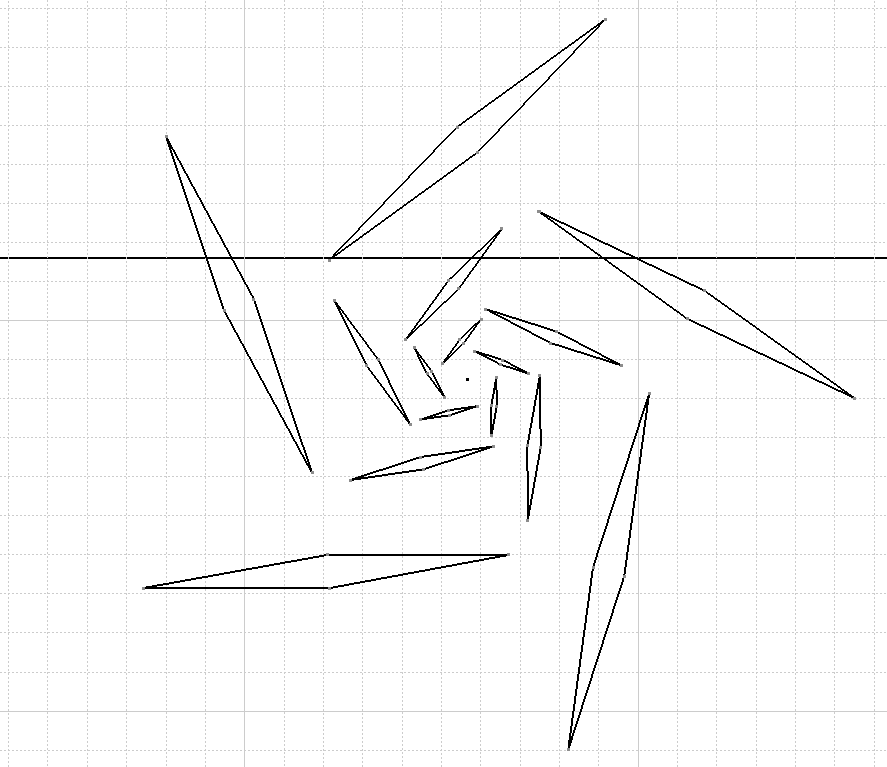

Laser cutting paper is part science, part art form. The paper’s manufacturer, glossiness, color, and weight all affect whether a cut succeeds. I’ve experimented with a few different types of paper, from basic printer paper to card stock.

Finding a good paper is key. I went through a number of different manufacturers and types until I found one that worked well. Darker colored papers cut best, and since the particular color didn’t matter for this project, I used those. The dark color hides soot marks and makes the paper absorb more laser light, which gives a better cut. AstroBright Grocer Kraft is a good paper and color, and it worked better than generic white card stock. The white card stock had too much of a sheen to it. The laser could cut it, but only with slower or multiple passes, and it sooted far more than the AstroBright paper.

Next up is a good lens. I found that the G8 lens is better than the stock Ortur Laser Master 2 lens for paper cutting. Using the G8, I’m able to cut paper in fewer passes, with less scorching and a smaller focus spot. This lens may even cut quickly through the bright white stock that the stock lens couldn’t handle (more experimentation is needed).

Holding It Down

I’ve tried a few different hold-down methods: nothing at all, blue tape, and a vacuum table. With nothing holding it down, the paper would curl up during cutting, causing the cuts to lose accuracy. It could also shift around from other forces in the environment, such as air conditioning, which caused issues.

Blue tape worked okay, but using it meant the edges of the paper weren’t cut completely through, and everything had to be removed gently to avoid damaging the paper. I could cut through the edges completely with slower speeds or more passes, but that meant more scorching on the rest of the paper, which didn’t need the extra power.
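To put rough numbers on that trade-off, you can estimate the energy the laser delivers per millimeter of cut: power divided by speed, times the number of passes. The sketch below is only a back-of-the-envelope comparison. The 5 W optical power is a placeholder (I haven’t measured mine), and it ignores the cooling that happens between passes.

```python
def line_energy_j_per_mm(power_w, feed_mm_per_min, passes=1):
    """Approximate energy delivered per mm of cut path, in joules."""
    if feed_mm_per_min <= 0:
        raise ValueError("feed rate must be positive")
    speed_mm_per_s = feed_mm_per_min / 60.0   # G-code feed is in mm/min
    return passes * power_w / speed_mm_per_s


if __name__ == "__main__":
    # Hypothetical settings: 5 W is an assumed optical power, not a measured one.
    normal = line_energy_j_per_mm(5.0, 600)          # one fast pass
    slower = line_energy_j_per_mm(5.0, 300)          # half the speed
    twice = line_energy_j_per_mm(5.0, 600, passes=2) # two fast passes
    print(normal, slower, twice)
```

Halving the speed or doubling the passes both double the energy going into every part of the cut, not just the stubborn edges, which is why the rest of the sheet ends up scorched.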

The final stop was a vacuum table, which both holds the paper down and controls the fumes. This works the best: it keeps the paper from bending upward during cutting while also pulling the fumes away, which cuts down on sooting. It can also prevent scorching by reducing flare-ups during cutting.

Conclusion

Paper cutting on the Laser Master isn’t bad once you have everything tuned: the G8 lens, darker card stock, and a vacuum table if possible. Together these give the quickest and cleanest cuts.