While debugging a problematic ESP32 recently, I needed to connect to it over its COM port from my PC to get more logging from the unit. In doing so, I ran into a very peculiar interaction: I couldn’t connect to the ESP32’s COM port while Cura was open. I’m guessing it was trying to connect to a 3D printer on that port? Whatever the cause, it was rather annoying. Once I closed Cura, I was able to connect and get the logging I needed.

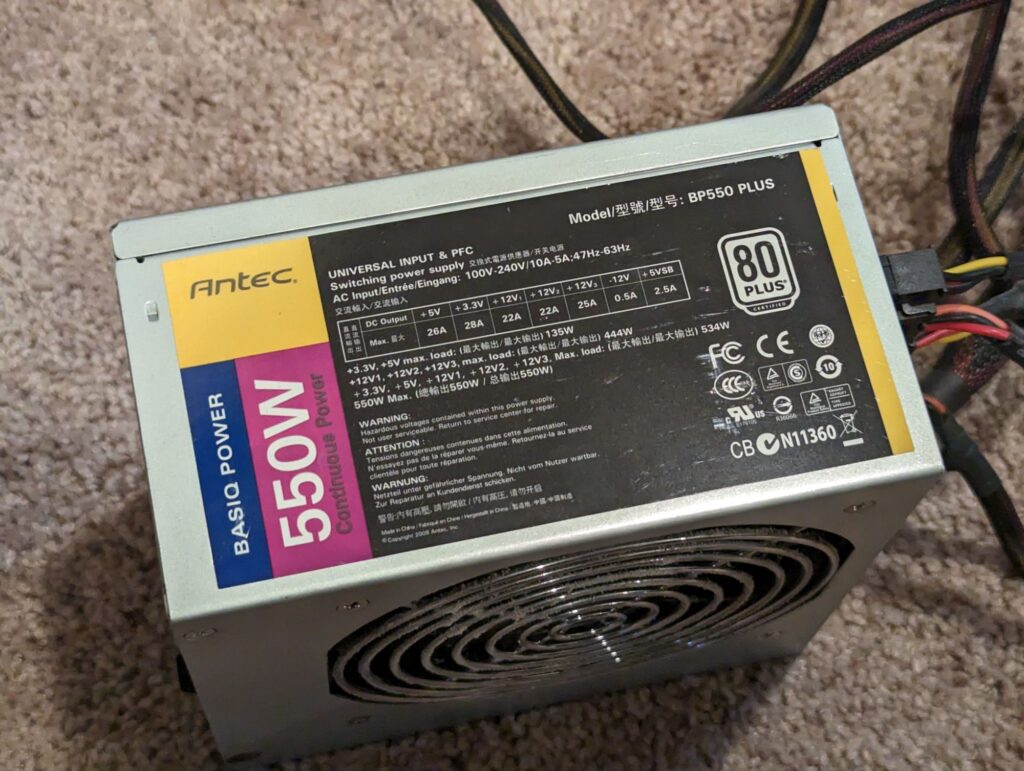

Ode to a PSU

The PSU that could: the Antec Basiq Power 550. It ran 24/7 in my NAS for 11 years, and for a few years before that in an old desktop. For a $55 PSU bought on sale from Newegg, I’d call that pretty good.

Aug 2011 – Nov 2024

Inkscape Printing

While making the labels for my NFC music tags, I ran into a fun bug when printing from Inkscape: sometimes it doesn’t print your entire image. In my case, it printed all the outline boxes for the labels but NONE of the actual images. I found out from a forum post that this is a common problem with more complex images.

The way around this is to use “Save a Copy” to save your document in PDF format, open that PDF in your favorite PDF viewer, and print it from there. Make sure in the print dialog that the PDF isn’t being scaled, as the application might try to be “helpful” that way (which is entirely unhelpful when you need the labels to be a specific size). Don’t print the Inkscape drawing to PDF from the print dialog, since that runs into the same print bug; it has to be saved in PDF format for everything to work.

I use Edge to open and print PDF documents, so I make sure “actual size” is selected rather than “fit to printable area”, which would shrink a document that already fits just fine on a sheet of paper.
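
If you print labels often, the PDF export step can also be scripted. This is a minimal sketch of an alternative I’m swapping in, not the “Save a Copy” workflow above: it assumes Inkscape 1.x is on your PATH and uses its command-line export options (--export-type and --export-filename). The file names are placeholders. You’d still open the resulting PDF in a viewer and print it at actual size.

```python
import subprocess
from pathlib import Path
from typing import List, Optional


def inkscape_pdf_command(svg_path: str, pdf_path: Optional[str] = None) -> List[str]:
    """Build an Inkscape 1.x command line that saves an SVG as a PDF."""
    src = Path(svg_path)
    # Default to the same name with a .pdf extension (labels.svg -> labels.pdf).
    dst = Path(pdf_path) if pdf_path else src.with_suffix(".pdf")
    return [
        "inkscape",
        "--export-type=pdf",
        f"--export-filename={dst}",
        str(src),
    ]


def export_to_pdf(svg_path: str, pdf_path: Optional[str] = None) -> None:
    """Run the export; raises CalledProcessError if Inkscape exits with an error."""
    subprocess.run(inkscape_pdf_command(svg_path, pdf_path), check=True)
```

For example, export_to_pdf("labels.svg") would write labels.pdf next to the drawing.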

Oddity in ESPHome

I ran into an oddity with the latest NFC Deck that I built. After startup, it would take an hour before Home Assistant connected and I could get logs out of it over WiFi with the ESPHome add-on. The ESP I used was no different from the one in the first NFC Deck I built, and the vast majority of the configuration was the same too.

There were, however, a few differences this time, due to how the ESPHome web installer and Home Assistant adoption process went. The big one I found was that web_server was enabled on the new one. This component serves a little web page where you can see everything on the device and interact with it. I guess it just couldn’t handle the number of components and sensors on the NFC Deck (9 buttons, 27 templates, 9 lights, an NFC reader, a piezo buzzer, and a rotary), because removing it from the configuration and uploading new firmware fixed the problem.

TL;DR: If Home Assistant takes FOREVER to connect to one of your ESPs, check whether the web_server component is included in the build. If it is, try removing it and see if that fixes everything.
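
With several ESPHome devices, it can help to check every config at once. This is a minimal sketch, assuming your device configs are .yaml files in a single directory of your choosing. It looks for an unindented web_server: line with a regular expression rather than a YAML parser, because ESPHome configs use custom tags such as !secret that a plain yaml.safe_load would reject. It won’t catch web_server pulled in through packages or includes.

```python
import re
from pathlib import Path
from typing import List

# Matches a top-level (unindented) "web_server:" key; commented-out lines start
# with "#" and indented keys start with spaces, so neither matches.
WEB_SERVER_KEY = re.compile(r"^web_server\s*:", re.MULTILINE)


def has_web_server(config_text: str) -> bool:
    """Return True if the config declares the web_server component at top level."""
    return WEB_SERVER_KEY.search(config_text) is not None


def configs_with_web_server(config_dir: str) -> List[str]:
    """List the .yaml files in config_dir that enable web_server."""
    hits = []
    for path in sorted(Path(config_dir).glob("*.yaml")):
        if has_web_server(path.read_text(encoding="utf-8")):
            hits.append(path.name)
    return hits
```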

Unraid API Causing the WebUI to Fail to Load

Recently my Unraid webUI had been running slowly, sometimes not showing the list of drives for a minute or two, or not at all. This finally culminated in an HTTP 500 Internal Server Error when I tried to load the main page. That wasn’t good, but I was still able to access the server over SSH and via SMB/NFS, so it wasn’t completely dead.

Time to debug over SSH. I first ran top and saw that shfs was at 100%+ CPU usage, which seemed like an odd program to be using more than a full CPU core. I then checked the syslog and saw lots of failed login attempts from a specific IP address. I didn’t recognize it, but I suspected it might be a container I was running (Unraid API, specifically).
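
Counting the failed attempts per address makes a noisy IP stand out quickly. This is a rough sketch: it assumes failed-login lines contain the word “failed” or “unsuccessful” plus an IPv4 address, which may not match Unraid’s exact log wording, so adjust the patterns to what your syslog actually shows. The /var/log/syslog default path is also an assumption about where your log lives.

```python
import re
from collections import Counter
from typing import Iterable, List, Tuple

# Assumption: failed-login lines contain one of these words and an IPv4 address.
FAILED = re.compile(r"failed|unsuccessful", re.IGNORECASE)
IPV4 = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


def count_failed_logins(lines: Iterable[str]) -> Counter:
    """Count failed-login lines per source IPv4 address."""
    counts: Counter = Counter()
    for line in lines:
        if FAILED.search(line):
            match = IPV4.search(line)
            if match:
                counts[match.group(0)] += 1
    return counts


def top_offenders(path: str = "/var/log/syslog", n: int = 5) -> List[Tuple[str, int]]:
    """Read a log file and return the n addresses with the most failures."""
    with open(path, encoding="utf-8", errors="replace") as log:
        return count_failed_logins(log).most_common(n)
```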

Next I ran docker ps to get the list of containers and, more importantly, the name of my Unraid API container. I then ran docker inspect on that container and found it had the IP address in question. Finally, I ran docker kill on the container, and the Unraid webUI started responding again. I guess I had misconfigured the container so it was failing to log in, and it was retrying aggressively enough to stall out the webUI.
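
The docker ps and docker inspect lookup can be folded into one step. This is a minimal sketch that shells out to the Docker CLI and searches the JSON that docker inspect prints for containers holding a given IP address on any network. The IP address and container name in the comments are hypothetical placeholders.

```python
import json
import subprocess
from typing import Any, Dict, List


def inspect_all_containers() -> List[Dict[str, Any]]:
    """Return the parsed `docker inspect` output for all running containers."""
    ids = subprocess.run(
        ["docker", "ps", "-q"], capture_output=True, text=True, check=True
    ).stdout.split()
    if not ids:
        return []
    out = subprocess.run(
        ["docker", "inspect", *ids], capture_output=True, text=True, check=True
    ).stdout
    return json.loads(out)


def find_container_by_ip(containers: List[Dict[str, Any]], ip: str) -> List[str]:
    """Return the names of containers that have `ip` on any attached network."""
    names = []
    for container in containers:
        # Networks can be missing or null, so fall back to an empty dict.
        networks = container.get("NetworkSettings", {}).get("Networks") or {}
        addresses = {net.get("IPAddress") for net in networks.values()}
        if ip in addresses:
            # docker inspect reports names with a leading slash, e.g. "/unraid-api".
            names.append(container.get("Name", "").lstrip("/"))
    return names
```

For example, find_container_by_ip(inspect_all_containers(), "172.17.0.5") returns the matching container names, which you could then pass to docker kill.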

Note to self: apps that scrape the webUI need to be configured correctly, or they may have some unintended consequences.

The VLANs Strike Back

I recently redid my entire network, changing switch locations, which ports were plugged in where, and so on. This wasn’t too bad, except I didn’t make note of one thing: which ports were VLAN tagged. That turned into quite the struggle as I tried to figure out why my WiFi couldn’t access the internet and why all my servers were inaccessible. I even changed which port the UniFi AP was plugged into, to rule out a VLAN problem. Little did I know that BOTH ports I tried were tagged, so the AP couldn’t reach the internet from either one. I ended up plugging my RT-N16 back into the modem to confirm I had internet, and then moved the access point to yet another switch port until I found one that wasn’t tagged. Once I had that, I moved my servers to untagged ports as well, which got my UniFi controller back up so I could clean up the rest and get things back into an operational state.

TL;DR: Label or remove VLANs before re-architecting things.

Amazon Fire Tablet Rant

I wouldn’t normally go into this, but boy does this product tell me that I’m the product being sold here, not the tablet. It started at checkout: Amazon asked if I wanted my account preloaded, and I am glad I said no, or they probably would have subscribed me to every paid service possible.

During the initial setup, there were multiple pages of “do you want to sign up for this monthly service for $xx/month”, and each one moved the accept and deny buttons, often swapping them from the previous page. So don’t just click through quickly, or you’ll end up with a new monthly bill.

On top of that, the permissions worked the same way: do you want the maps app to be able to use this permission? (Oh, and Amazon gets to track it too; you can’t allow one without the other.) This really looked like they saw the Windows 10 and Google privacy settings and said “we can do worse, much worse”.

One final insult from Amazon is how this tablet handles its lock screen ads: when the tablet is asleep, it will wake up the screen just to play ads. This is just… one great big slap in the face. Pros: cheap. Cons: software. Now to work on rooting it and installing LineageOS, if that’s possible.

Proxmox VM Misconfiguration Downtime

I’ve got a VM running a critical service on my Proxmox host. This VM doesn’t hold any real data to speak of; it just runs a few automated tasks that we rely on, so its virtual disk is rather small. At some point while working on the VM (likely when restoring a backup after an earlier problem), I must have restored it with its virtual disk on storage mounted from my NAS. So my virtual machine had been running off my NAS for a while, until one day I had to bring the NAS down for an extended period and the service went down with it. I spent a bit of time trying to get it back up before realizing that the disk lived on the NAS, which wouldn’t be up for a while. Once the NAS was back up, I moved the disk back to the virtualization host where all the other VMs run from… oops.

Recent Hardware Failures

I’ve recently run into a few hardware failures in my servers and network, so I figured I’d write up my debugging process and resolutions for all of them. The faults include a switch’s wall adapter, a motherboard network interface, and a NAS data disk.

The Side Effects of RAM Issues

I had been fighting failing parity checks for a few months on my Unraid server. I looked into each disk, checked SMART stats, and even thought I had found the hard drive causing the issues. I kept it in my array, with no data on it, just in case. Then another set of problems arose: the VMs on my server started acting up and crashing, and eventually, when I logged into one VM, everything crashed due to memory problems. I ran memtest and discovered that one of my RAM sticks was the culprit, and from there determined that it simply wasn’t seated properly. After reseating the RAM, everything started working properly again: parity checks come back clean, there are no more kernel panics, and the VMs are running stably. One partially unseated RAM stick caused all those issues.