I had been running short on time to maintain my servers, so I decided to look into automating some of that work. I came across Ansible, which manages the configuration and software installation of multiple servers using software that is usually already installed on them: Python and SSH.

Setting up Ansible is the easy part: give the Ansible host SSH key-based access to every machine it will manage. I gave it root access to those machines so that it can run mass updates without problems and without prompting for dozens of passwords, and because I don’t have Kerberos or a domain-based login system.

Prerequisites on each system, installed before Ansible is used on it:

- python (2.x)

- python-apt (on Debian-based systems)

- aptitude (on Debian-based systems)
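A quick way to see whether a machine already meets these requirements is to check which commands are on the PATH. The sketch below is only an illustration: `missing_commands` is a hypothetical helper name, and it can only check for executables (python, aptitude). python-apt is a library package rather than a command, so on a Debian-based system it would have to be checked through the package manager instead.

```shell
# Print the name of every listed command that is not found on PATH.
# (missing_commands is a hypothetical helper, not part of Ansible.)
missing_commands() {
    for cmd in "$@"; do
        command -v "$cmd" >/dev/null 2>&1 || printf '%s\n' "$cmd"
    done
}

# Lists whichever of the two is absent; prints nothing if both are present.
missing_commands python aptitude
```

Anything this prints still needs to be installed before Ansible can manage the machine.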

Installing Ansible on the host machine was as easy as yum install ansible; for a more recent version, it can be installed from the Git repository. That is covered in many other places, so it won’t be covered here.

Run the following commands on the machines that will be managed by Ansible:

>sudo su

>mkdir -p ~/.ssh

>touch ~/.ssh/authorized_keys

>chmod 700 ~/.ssh

>chmod 700 /home/administrator/.ssh

These commands create the .ssh directory if it doesn’t already exist and put an authorized_keys file in it. Because they run in a root shell, ~/.ssh here is root’s directory; the last command applies the same restriction to the administrator user’s .ssh directory. Locking the directories down is needed for the SSH server to trust that the files within haven’t been tampered with: if .ssh were left open to group and others, the SSH server could refuse logins that use the SSH keys.
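The same preparation can be wrapped in a small function so it can be repeated for more than one account. This is a minimal sketch, not part of the original steps: `prepare_ssh_dir` is a hypothetical name, and the 600 mode on authorized_keys is a common extra hardening step that the commands above don’t set. It is demonstrated on a scratch directory rather than a real home directory.

```shell
# Create <home>/.ssh and authorized_keys with restrictive permissions.
# (prepare_ssh_dir is a hypothetical helper name.)
prepare_ssh_dir() {
    home_dir="$1"
    mkdir -p "$home_dir/.ssh"                    # no error if it already exists
    touch "$home_dir/.ssh/authorized_keys"
    chmod 700 "$home_dir/.ssh"                   # owner-only directory
    chmod 600 "$home_dir/.ssh/authorized_keys"   # assumption: extra hardening
}

# Try it on a throwaway directory and show the resulting modes.
scratch=$(mktemp -d)
prepare_ssh_dir "$scratch"
ls -ld "$scratch/.ssh" "$scratch/.ssh/authorized_keys"
```

On a real machine the argument would be the account’s home directory, and the function would need to run as that account (or be followed by a chown) so that the files end up owned by the right user.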

Run the following commands on the Ansible host:

>ssh-keygen -t rsa

>ssh-copy-id administrator@192.168.1.23

These commands generate a public/private key pair for SSH key-based logins and then copy the public key to the machine we will be managing.
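With more than a couple of machines, the copy step can be looped. The following is a rough sketch under a few assumptions: `copy_key_to_hosts` and the `DRY_RUN` variable are hypothetical names, the administrator account exists on every machine, and the addresses are the ones used in the inventory example later in this post. With `DRY_RUN=1` it only prints the commands it would run.

```shell
# Copy the public key to each host in turn.
# With DRY_RUN=1 the commands are printed instead of executed.
# (copy_key_to_hosts and DRY_RUN are hypothetical names.)
copy_key_to_hosts() {
    user="$1"
    shift
    for host in "$@"; do
        if [ "${DRY_RUN:-0}" = "1" ]; then
            echo "ssh-copy-id $user@$host"
        else
            ssh-copy-id "$user@$host"
        fi
    done
}

DRY_RUN=1
copy_key_to_hosts administrator 192.168.2.1 192.168.2.2 192.168.2.3
```

Setting `DRY_RUN=0` runs ssh-copy-id for real, which will prompt for each machine’s password once.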

Run the following command as root on the machines that will be managed by Ansible:

>cp /home/administrator/.ssh/authorized_keys ~/.ssh/authorized_keys

This command copies the public key from the local user to the root user, allowing Ansible to log in as root and manage the machines.

Once SSH key-based logins are enabled on all the machines, the machines need to be added to the Ansible hosts file (/etc/ansible/hosts). This file tells Ansible the IP addresses of all the machines it should manage. It is a plain text file and can be edited easily with nano. Add a group (a header of the form “[Group-Name]”) to the file with your hosts underneath it; an example is shown below.

>[rapternet]

>shodan ansible_ssh_host=192.168.2.1

>pihole ansible_ssh_host=192.168.2.2

>webserv ansible_ssh_host=192.168.2.3

>matrapter ansible_ssh_host=192.168.2.4

>unifi ansible_ssh_host=192.168.2.5

This adds the rapternet group with shodan, pihole, webserv, matrapter, and unifi servers in it.
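To experiment without touching /etc/ansible/hosts, the same kind of inventory can be written to a separate file. This is a sketch under assumptions: the file path is a hypothetical scratch location, only two of the hosts are listed, and the `[rapternet:vars]` section with `ansible_ssh_user=root` is my addition to make Ansible connect as root, matching the root key setup described earlier.

```shell
# Write a throwaway inventory file (hypothetical path, not /etc/ansible/hosts).
inventory="${TMPDIR:-/tmp}/hosts.example"

cat > "$inventory" <<'EOF'
[rapternet]
shodan ansible_ssh_host=192.168.2.1
pihole ansible_ssh_host=192.168.2.2

# Assumption: connect as root for every host in the group
[rapternet:vars]
ansible_ssh_user=root
EOF

cat "$inventory"
```

Ansible can then be pointed at this file with the `-i` option, for example `ansible -i "$inventory" rapternet -m ping`, which targets only the rapternet group.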

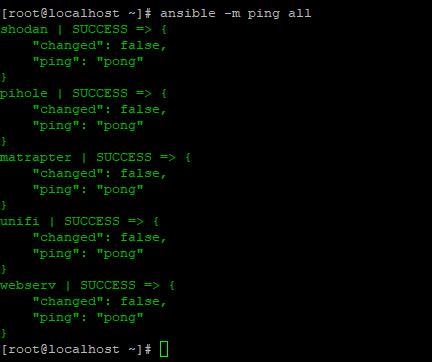

One easy way to test the ansible setup is to ping all the machines:

>ansible -m ping all

This checks the basics of the setup: Python 2.x is present on each machine and the SSH connection works.

As can be seen, every server reports that nothing has changed (as expected for a simple ping) and that a pong response was sent back to the host. This is a successful test of the Ansible setup.

References: