My NAS has been getting old, and it’s become more and more noticeable over time. Considering that I built it in 2013, I’d say it’s had a good 10+ year lifespan and could use a solid upgrade. I plan on reusing a fair bit of hardware, but I also plan on moving to ZFS for my primary storage, so I’ll be getting new drives to use in a new ZFS-backed array.

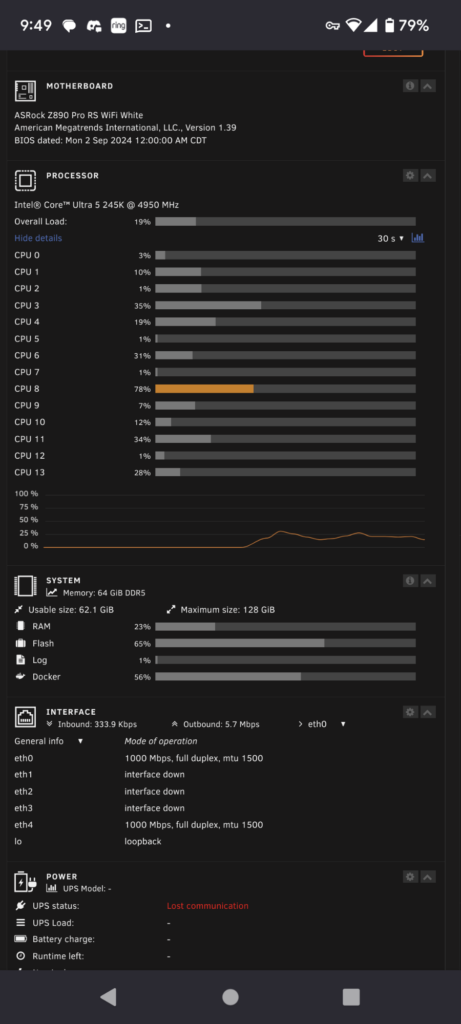

The heart of the machine sorely needs updating. It is currently a quad-core E3 that hits 100% usage pretty quickly, especially while doing anything with media. Since Quick Sync is still pretty hard to beat, I plan on using an Intel CPU (the latest have hardware AV1 encoders and decoders, which will be useful) along with a bit of a RAM upgrade (which should be helpful when running ZFS).

Hardware

- New components

- Core Ultra 5 245K

- Noctua NH-U12A CPU cooler

- 64GB RAM

- ASRock Motherboard

- 2x 2TB SSD

- 4x 18TB WD Red Pro HDD

- From Existing Build

- 750W PSU (recent addition)

- Some other random drives from previous build

- Fractal Design Define XL R2

- LSI HBA

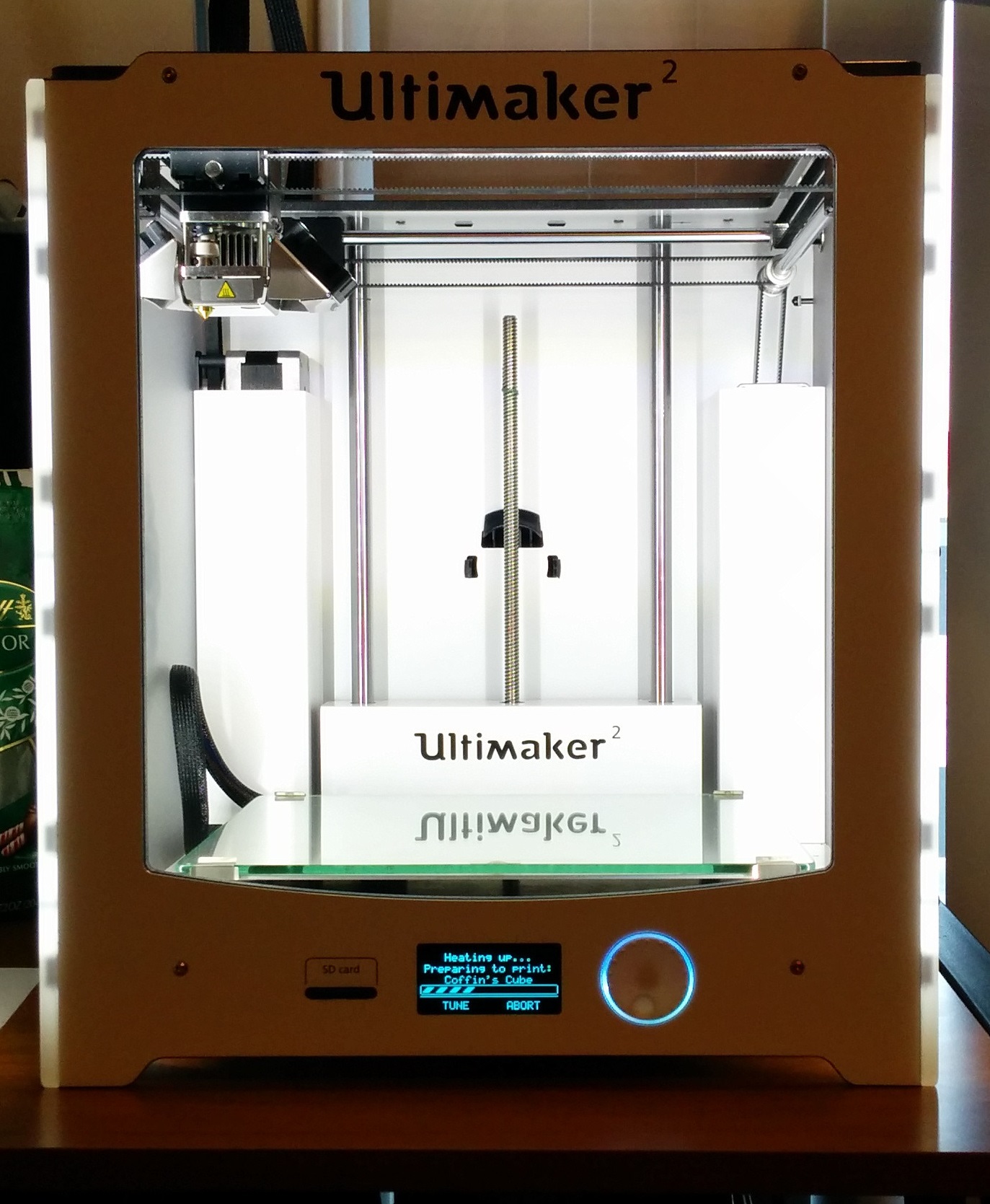

Build

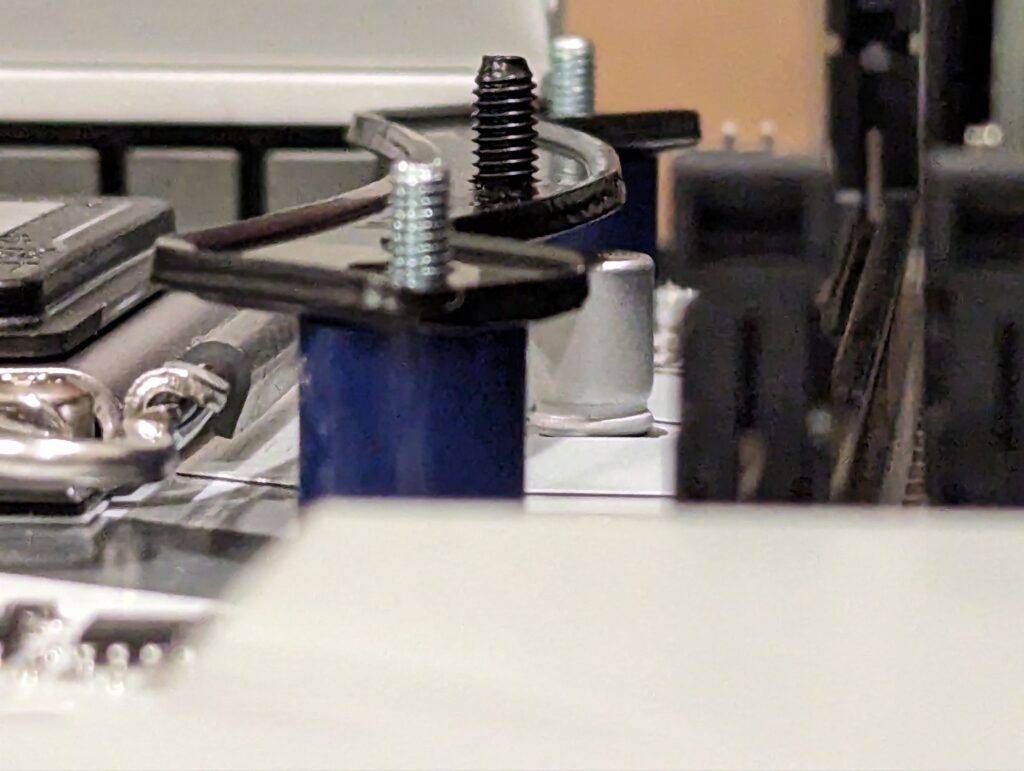

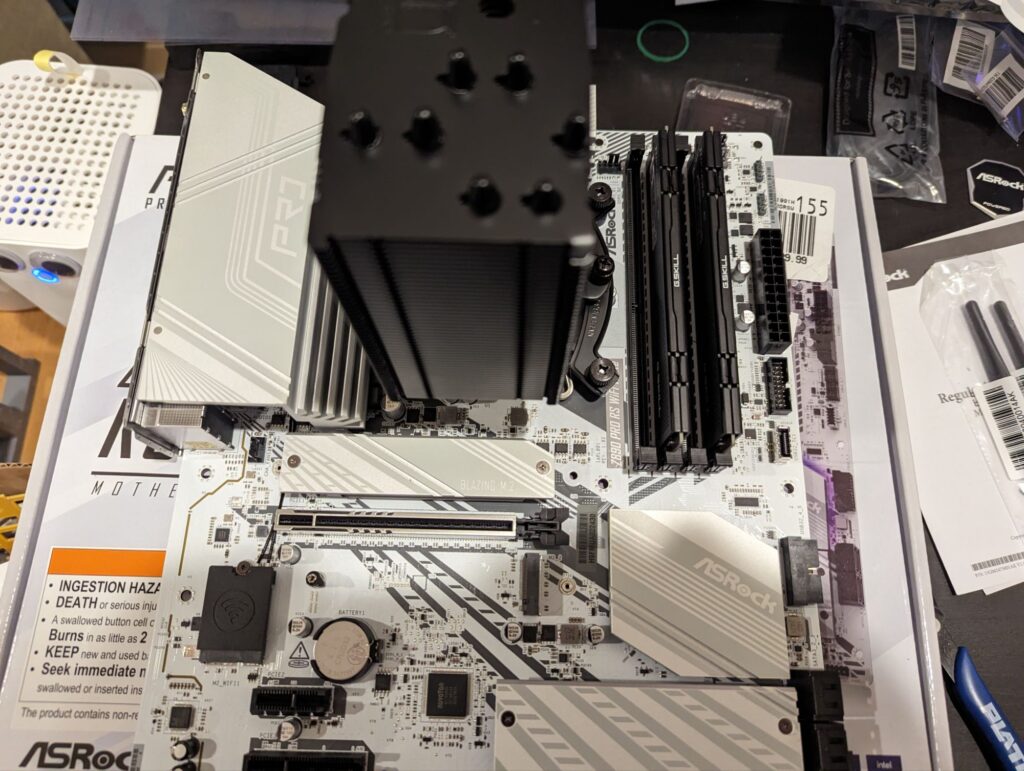

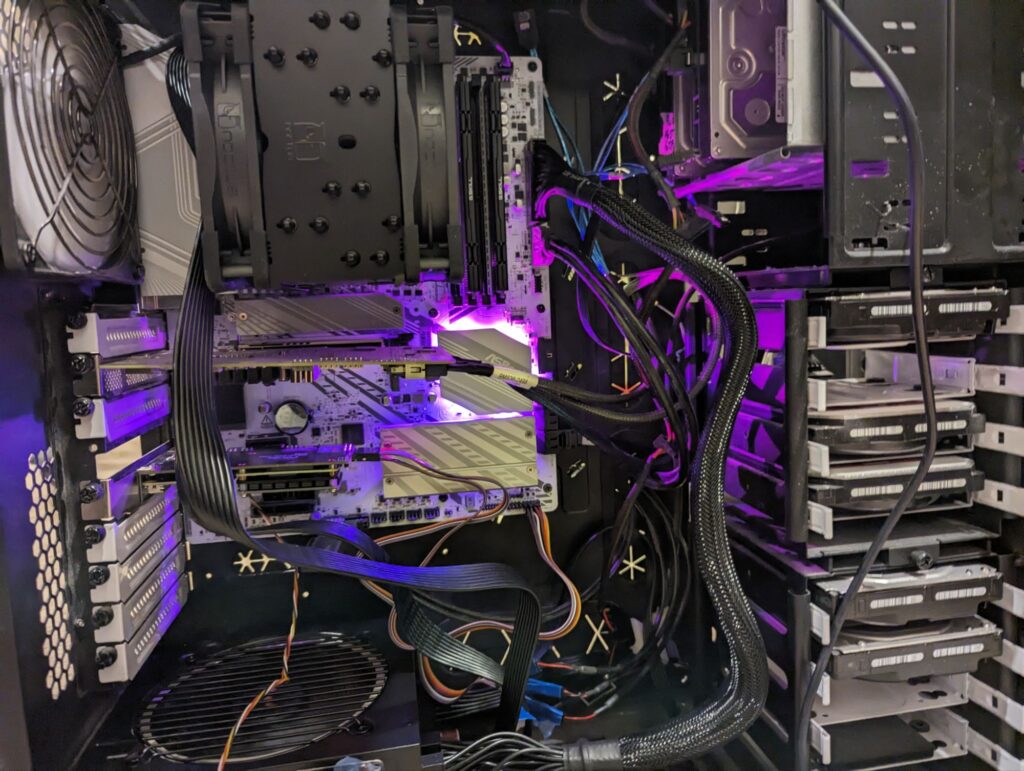

I was a bit nervous when I started mounting the CPU cooler to the motherboard. I noticed the VRMs were pretty close to the socket and in the area that the cooler bracket was going to go over. I carefully lined things up and before bolting it all down, I double checked the clearance. It all cleared, but it is certainly tight.

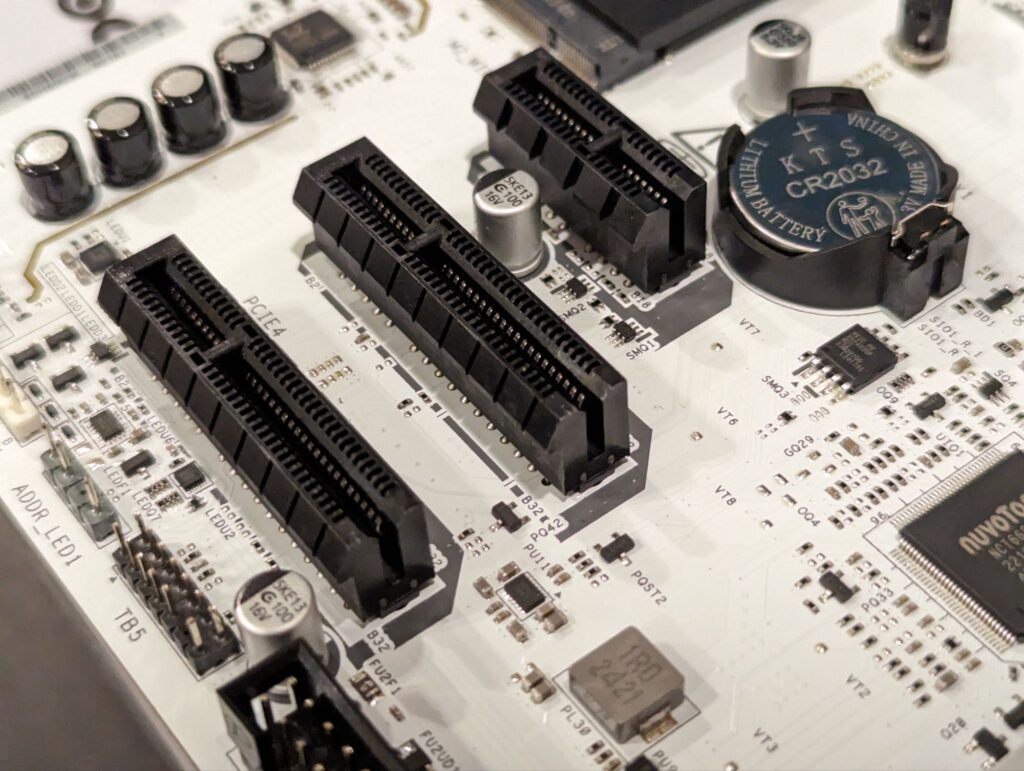

I was, however, pleasantly surprised by the slotted (open-ended) PCIe slots. This means I don’t have to worry about card length when installing PCIe cards, which matters because I will probably end up adding more networking at some point in this build.

I had to move the motherboard mount points around in my case to match the new motherboard. The old one was mATX and this one is ATX, so I also had to add a few.

Sadly, my PSU cables aren’t long enough to run behind the motherboard tray, so I’m stuck with them out front, really bungling up the look (which will never be seen unless I’m working on it, but it’s the principle of the matter).

Software

UEFI

As is my default when setting up a new motherboard, I turned off Secure Boot and made sure virtualization support was enabled. I’m not sure if there’s a setting to power on when power is provided (I’ve been unable to find it), but I want to get an IP KVM (like a JetKVM or something similar) for that kind of control.

OS

I went into the flash drive and manually upgraded to the latest version of Unraid. In hindsight, I probably should’ve done this before the hardware upgrade.

I then went to the version zip file I used for the upgrade and copied its EFI folder over to the flash drive. My flash drive had an EFI- folder, which disables EFI boot. My new motherboard didn’t have any legacy boot options, so this was the only way to go.
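The copy step above can be sketched in Python. This is only an illustration of what I did by hand: the function name and both paths are made up for the example, and it assumes the release zip has already been extracted somewhere and the flash drive is mounted.

```python
import shutil
from pathlib import Path


def copy_efi_folder(extracted_zip: Path, flash_root: Path) -> Path:
    """Copy the EFI folder from an extracted release zip onto the flash drive.

    Both arguments are hypothetical locations supplied by the caller.
    """
    source = extracted_zip / "EFI"
    if not source.is_dir():
        raise FileNotFoundError(f"no EFI folder found in {extracted_zip}")
    target = flash_root / "EFI"
    # dirs_exist_ok (Python 3.8+) lets the copy refresh an EFI folder
    # that is already present instead of failing.
    shutil.copytree(source, target, dirs_exist_ok=True)
    return target


if __name__ == "__main__":
    # Placeholder paths; substitute wherever the zip was extracted and
    # wherever the flash drive is mounted.
    print(copy_efi_folder(Path("./unraid-release"), Path("./flash")))
```

Any existing EFI- folder is left untouched by this sketch, so nothing on the flash drive is removed.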

Network

On my old server, the motherboard NIC had died, so I disabled it in the OS. That meant that when I transferred my config, the motherboard NIC on the new board was disabled too. I had to reinstall my 4-port NIC and plug into that to get the Unraid web UI up. I also disabled the static IP reservations in UniFi while I was at it. Unraid only lets you apply one network configuration change at a time, and I had a few to do:

- Enabled the motherboard NIC

- Removed the static IP from the add-in network card

- Navigated to the IP on the new motherboard NIC to get back into the Unraid UI

- Added the static IP to the motherboard NIC

- Went back to the old IP

Once that was all done, I went into UniFi and applied the static IP reservation to the new NIC.

Unraid Apps

Plex wouldn’t start after I got all the networking squared away. Digging into it, I managed to find the root cause: the Intel iGPU passthrough I was using was broken. Removing it cleared up the issue and got things working again (though now I have to figure out what is needed to get the passthrough working on the new hardware).
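One quick check when chasing this kind of problem is whether the host exposes any GPU render nodes at all. The sketch below assumes the passthrough maps `/dev/dri` into the container, which is a common setup but not something I have confirmed is the cause here; the function name is made up for the example.

```python
from pathlib import Path


def find_render_nodes(dri_dir: Path = Path("/dev/dri")) -> list[str]:
    """Return the names of render nodes (e.g. renderD128) found under dri_dir."""
    if not dri_dir.is_dir():
        # No directory at all usually means no GPU driver created device nodes.
        return []
    return sorted(p.name for p in dri_dir.glob("renderD*"))


if __name__ == "__main__":
    nodes = find_render_nodes()
    if nodes:
        print("Render nodes present:", ", ".join(nodes))
    else:
        print("No render nodes found under /dev/dri.")
```

If the list comes back empty, the container has nothing to attach to, which would at least narrow down where to look next.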

Oddities

The LSI HBA no longer shows its BIOS screen or boot options before the motherboard UEFI splash screen appears. Previously, the LSI HBA would list out the drives and let you hit a key to jump into its configuration during boot. The network card I have plugged in used to do the same for each port on board (which added a fair amount to the boot time), but it no longer does either. I think this is related to legacy boot support vs UEFI boot support for those devices. Since I never really made use of those features, I’m not worried about losing them.

My boot up should also be shorter now that the motherboard NIC is functional. Unraid would get hung up on that every boot on my old motherboard and it added a few minutes to the boot times as it waited to time out before it finally gave up on the NIC that was disabled in the GUI.

The onboard network adapter supports 2.5GbE but my switches do not, so whenever I need new switching, I’ll be keeping an eye out for multi-gig capabilities (the wiring in the wall should at least support 5GbE).

New Drives

As part of the upgrade, I plan on replacing my array with ZFS to help avoid in-place file corruption. I have a number of new drives to start building out that array. They’re 18TB WD Red Pro drives. I plan on pre-clearing them to make sure everything is in order, and then creating a RAIDZ1 pool with them. I also plan on creating a mirrored pool of two new SSDs for my cache drive, which will continue holding my Docker app data.
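For a rough sense of what the RAIDZ1 pool will hold, here is a back-of-the-envelope calculation. It assumes all four of the 18TB drives from the parts list go into a single RAIDZ1 vdev and simply subtracts one drive's worth of parity; it ignores ZFS metadata, padding, and reserved space, so the real usable figure will be lower. The function names are made up for the example.

```python
def raidz_usable_tb(drive_count: int, drive_tb: float, parity: int = 1) -> float:
    """Approximate usable capacity in TB: total drives minus parity drives."""
    if drive_count <= parity:
        raise ValueError("need more drives than parity devices")
    return (drive_count - parity) * drive_tb


def tb_to_tib(tb: float) -> float:
    """Convert decimal terabytes (10**12 bytes) to binary tebibytes (2**40 bytes)."""
    return tb * 10**12 / 2**40


if __name__ == "__main__":
    usable = raidz_usable_tb(drive_count=4, drive_tb=18)
    print(f"Approximate usable space: {usable:.0f} TB ({tb_to_tib(usable):.1f} TiB)")
```

The TB to TiB conversion is there because drive makers quote decimal terabytes, while many tools report binary units, which makes the same pool look smaller.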

BliKVM Flop

My attempt to use the BliKVM PCIe was an utter flop. It wouldn’t boot, get network, or do anything really other than blink some LEDs. If I plugged it into my switch’s PoE port, it would even crash the switch and prevent it from starting (the entire switch literally went dark when I plugged it in to PoE, despite its advertised “support”). I gave up on that and will get a NanoKVM or JetKVM instead.

Future Changes

The new power supply’s cables don’t quite reach when run behind the motherboard tray. Because of this, I end up with some pretty sloppy cable gore. It’s a closed case, so it’s not the worst, but it still makes maintenance harder. I’d like to get some custom-length CableMod cables (which are available for my PSU) and replace some of them so that I can get better routing. I could even do this with some of the HDD cables, whose lengths are not at all optimal. Overall, though, I’m happy with the build: it’s all running well, with plenty of hard drive space and plenty of performance.