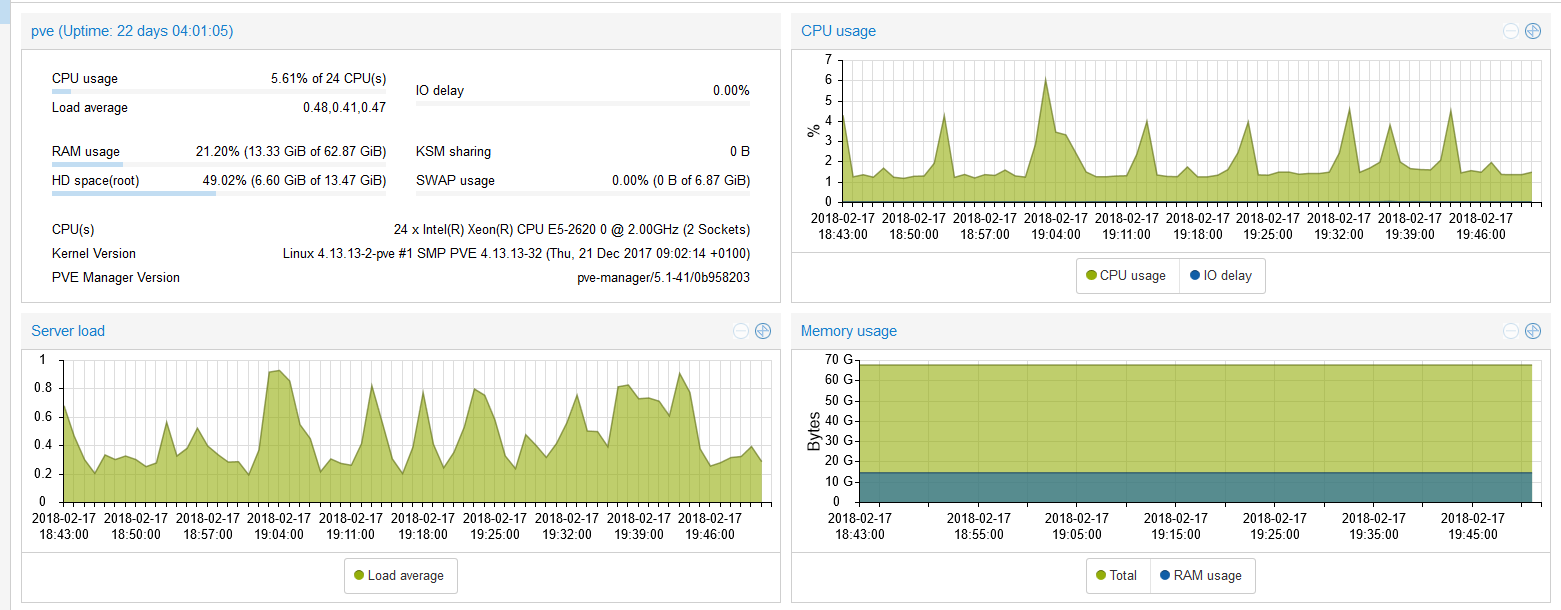

I spent a few months looking at servers for a new vhost, trying to determine what I would need and what I could make use of. I was running out of RAM in my NAS for virtual machines, and I kept running into times when I wanted to reboot the NAS for updates but didn’t want to take down my entire lab. So I decided to build a new server to host the virtual machines that rely less on the NAS (IRC bots, websites, wiki pages, etc.) as well as other, more RAM-intensive systems. The new server was to support a large amount of RAM (more than 32GB maximum), which would give me plenty of room to play. I plan on leaving some services running on the NAS, which has plenty of resources to handle a few extra duties like Plex and backups.

The new vhost was also to be all flash. The NAS uses a WD Black drive as the virtual machine host drive, which slows down when running updates on multiple machines. In the future I may also upgrade the NAS to an SSD for its cache drive and VM drive.

Here is what I purchased:

| Component | Part |
| --- | --- |
| System | Dell T5500 |
| CPU | 2x Intel Xeon E5-2620 |
| PSU | Dell 875W |
| RAM | 64GB ECC |
| SSD (OS) | Silicon Power 60GB |
| SSD (VM) | Samsung 850 EVO 500GB |
| NIC | Mellanox ConnectX-2 10G SFP+ |

I chose the tower workstation because it is quiet, supports ECC RAM, and has a fair bit of expandability. The 10G NIC is hooked up as a point-to-point link to the NAS. The motherboard's 1G NIC is set up as a simple connection to the rest of my LAN. The VM SSD is formatted as ext4 and added to Proxmox as directory storage. All VMs are going to use qcow2 disk images, as they support thin provisioning and snapshots. I considered LVM-Thin storage, but it seemed to add complexity without any feature or performance gains.
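To make the thin provisioning point concrete, here is a minimal Python sketch that compares the virtual size a qcow2 image advertises to its guest with the space it actually occupies on the ext4 directory. It reads the virtual size straight from the qcow2 header and assumes a Linux host; the function name `qcow2_usage` is my own, not part of any Proxmox or QEMU tooling. `qemu-img info` reports the same two numbers and is the better choice for everyday use.

```python
import os
import struct

QCOW2_MAGIC = b"QFI\xfb"  # first four bytes of every qcow2 image


def qcow2_usage(path):
    """Return (virtual_size, allocated_bytes) for a qcow2 image.

    virtual_size is the disk size the guest sees, taken from the header.
    allocated_bytes is what the file currently occupies on the host.
    """
    with open(path, "rb") as f:
        header = f.read(32)
    if len(header) < 32 or header[:4] != QCOW2_MAGIC:
        raise ValueError(f"{path} does not look like a qcow2 image")

    # Header fields after the magic are big-endian:
    # version (u32), backing_file_offset (u64), backing_file_size (u32),
    # cluster_bits (u32), size (u64, virtual disk size in bytes).
    _version, _backing_off, _backing_len, _cluster_bits, virtual_size = (
        struct.unpack(">IQIIQ", header[4:32])
    )

    # st_blocks counts 512-byte units on Linux, so this also handles
    # images whose file length is larger than their allocated space.
    allocated = os.stat(path).st_blocks * 512
    return virtual_size, allocated
```

Pointing this at a disk image under the directory storage (for example a hypothetical `/mnt/vmstore/images/100/vm-100-disk-0.qcow2`, where the mount point is an assumption) should show an allocated size well below the virtual size for a freshly installed VM, with the gap closing as the guest writes more data.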