From time to time I run small experiments to try out features or functionality before building them into a project (or even a project design). In this case I wanted to try out the IR functionality in WLED for a project that may not have WiFi access to the full-fledged WLED feature set.

Continue reading “IR Remote with WLED”

Getting SVG Data into CATIA

Another day, another type of data to import into CATIA. This time it is the classic SVG format used by LightBurn and often edited with InkScape. The process is pretty quick, but I wanted a bunch of screenshots to make it easy to follow.

Continue reading “Getting SVG Data into CATIA”

InkScape and LightBurn

Unable to Perform Path Operations

If you can’t do boolean operations in InkScape, you may have to ungroup the paths. InkScape can’t do path operations on groups, and it will sometimes put even a single object into a group. You can still combine paths and do boolean operations on them, but that means using the path combine tool; you just cannot use the grouping feature.

LightBurn Limitations

LightBurn doesn’t understand InkScape’s object operations, so don’t use object clip or mask when creating files for LightBurn. Instead of the object tools, use path difference or intersection to make changes with a similar effect.
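Both notes above can be checked before a file ever reaches LightBurn. The following is only a rough sketch, not an official InkScape or LightBurn tool: it scans SVG text for groups (other than layers), clip or mask definitions, and elements that use a clip or mask. The function name `scan_svg` and the finding labels are made up for this example, and it assumes InkScape marks layers with its usual `inkscape:groupmode="layer"` attribute.

```python
import xml.etree.ElementTree as ET

# Assumption: Inkscape's standard namespace, used to tell layers from groups.
INKSCAPE_NS = "http://www.inkscape.org/namespaces/inkscape"
GROUPMODE = "{" + INKSCAPE_NS + "}groupmode"


def _style_props(elem):
    """Return the CSS property names found in an element's style attribute."""
    props = set()
    for decl in elem.get("style", "").split(";"):
        name, sep, _value = decl.partition(":")
        if sep:
            props.add(name.strip())
    return props


def scan_svg(svg_text):
    """Return (kind, id) pairs for things worth fixing before export.

    Kinds (hypothetical labels): "group", "clipPath-def", "mask-def",
    "clip-path-use", "mask-use".
    """
    findings = []
    root = ET.fromstring(svg_text)
    for elem in root.iter():
        tag = elem.tag.rsplit("}", 1)[-1]  # drop the XML namespace prefix
        ident = elem.get("id", "<no id>")
        # Groups block path operations; layers are also <g>, so skip those.
        if tag == "g" and elem.get(GROUPMODE) != "layer":
            findings.append(("group", ident))
        # Definitions left behind by object clip / mask.
        if tag in ("clipPath", "mask"):
            findings.append((tag + "-def", ident))
        # Elements that reference a clip or mask, by attribute or by style.
        style = _style_props(elem)
        for prop in ("clip-path", "mask"):
            if elem.get(prop) is not None or prop in style:
                findings.append((prop + "-use", ident))
    return findings
```

To try it on a real file, read the file into a string (for example with `pathlib.Path("drawing.svg").read_text()`, where the file name is a placeholder) and print whatever `scan_svg` returns. An empty list only means none of these specific patterns were found, not that LightBurn will import the file cleanly.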

Cleaning up the docker registry

While working on my docker swarm recently, I noticed that my storage was running low. After some investigation, I found that my docker registry was using 17GB. I have no need to keep that many versions of my custom-built containers on hand, so I went through the process of cleaning it up.

Continue reading “Cleaning up the docker registry”

Home Assistant Picture-Elements Card

I’ve seen picture elements cards in Home Assistant dashboard screenshots from time to time and was always curious how they were made. After digging into it, I found that, as with most things Home Assistant, it’s pretty easy to do and can be as complex as you see fit. Here I’ll be setting up a simple picture elements card for my air quality sensors.

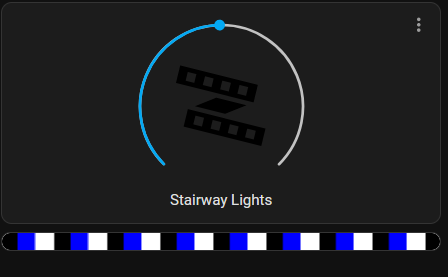

Continue reading “Home Assistant Picture-Elements Card”

Adding WLED Previews to Home Assistant Dashboards

I recently found out about the webpage card in Home Assistant and how it can be used to add a WLED live view to your Home Assistant dashboard. Not only that, but it’s pretty simple to do as well!

Continue reading “Adding WLED Previews to Home Assistant Dashboards”

Converting STL to STEP

CATIA is my CAD of choice for most things, mostly because it’s what I know best. However, it doesn’t have great support for STL files. We’ll be using FreeCAD to convert them to STEP files, which CATIA supports well. First, we want to open our STL file with FreeCAD.

Continue reading “Converting STL to STEP”

Updating my IOT Devices

Keeping IOT devices up to date is a hard task, since they come from all sorts of different ecosystems, software projects, companies, etc. I run only FOSS IOT devices in order to avoid the problems with proprietary software and companies going out of business. In this post, I’ll go over how I’m keeping my three types of devices (ESPHome, WLED, and Tasmota) up to date.

Continue reading “Updating my IOT Devices”

Renaming ESP Devices in ESPHome

I recently started trying out the ESPHome Add-On in Home Assistant. With it, I was able to add and upgrade some ESP devices. However, soon after adding one of them, I realized that the auto-generated name may not be the one you want (or you may be moving a device around and want a new name). So I did some research and worked out the process for renaming a device in ESPHome.

Continue reading “Renaming ESP Devices in ESPHome”

Joplin Failed to Fetch Due to Certificate Expiration

Recently I ran into an error when trying to update Joplin (hooked up to WebDAV on NextCloud).

Last error: FetchError: request to https://nextcloud.rapternet.us/remote.php/dav/files/sbachhub/Documents/Joplin/info.json failed, reason: certificate has expired

I checked the SSL cert with Firefox and everything looked good there. Finally, as a long shot at a simple fix, I decided to try updating the application, and… it worked. It might have just needed a reboot, but the update worked in this case.
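When the browser and the application disagree like this, a third opinion on the certificate can help narrow things down. Below is a minimal sketch using only Python's standard library. The helper names `days_until_expiry` and `check_host` are invented for this example, and it relies on Python's own trust store, which may not match what either Firefox or Joplin uses, so treat the result as one more data point rather than a verdict.

```python
import socket
import ssl
import time
from typing import Optional


def days_until_expiry(not_after: str, now: Optional[float] = None) -> float:
    """Days left before a certificate's notAfter date (negative if expired).

    not_after uses the format returned by getpeercert(),
    for example "Jan  5 09:34:43 2018 GMT".
    """
    expires_at = ssl.cert_time_to_seconds(not_after)
    if now is None:
        now = time.time()
    return (expires_at - now) / 86400


def check_host(host: str, port: int = 443, timeout: float = 10.0) -> str:
    """Connect to host and describe its certificate as Python sees it."""
    context = ssl.create_default_context()
    try:
        with socket.create_connection((host, port), timeout=timeout) as sock:
            with context.wrap_socket(sock, server_hostname=host) as tls:
                not_after = tls.getpeercert()["notAfter"]
    except ssl.SSLCertVerificationError as err:
        # An expired leaf or intermediate certificate ends up here.
        return f"{host}: verification failed ({err.verify_message})"
    days = days_until_expiry(not_after)
    return f"{host}: certificate valid for {days:.1f} more days"
```

Calling `print(check_host("your.nextcloud.host"))` with the real server name (the one here is a placeholder) prints either the remaining validity or the verification error. If this check passes while the application still reports an expired certificate, the application's bundled certificate store is a plausible suspect, which would fit with an update fixing it, though that is a guess rather than a confirmed cause.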