I decided to reorganize my Home Assistant dashboards, which culminated in adding a new dashboard and moving a number of views from other dashboards to it. What I didn’t realize was that this wasn’t as easy as going into the UI and hitting copy on the view, though it wasn’t hard to do in the end (basically one more step and then hitting copy).

Continue reading “Moving a View between two Home Assistant Dashboards”

Updating the Webserver to Ubuntu 24.04

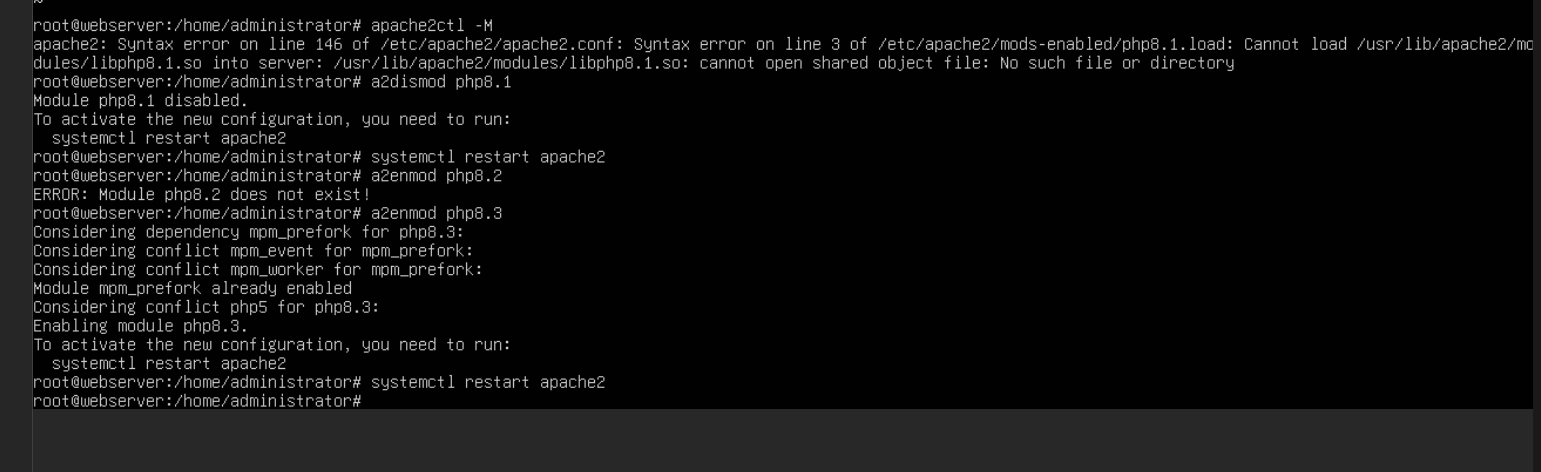

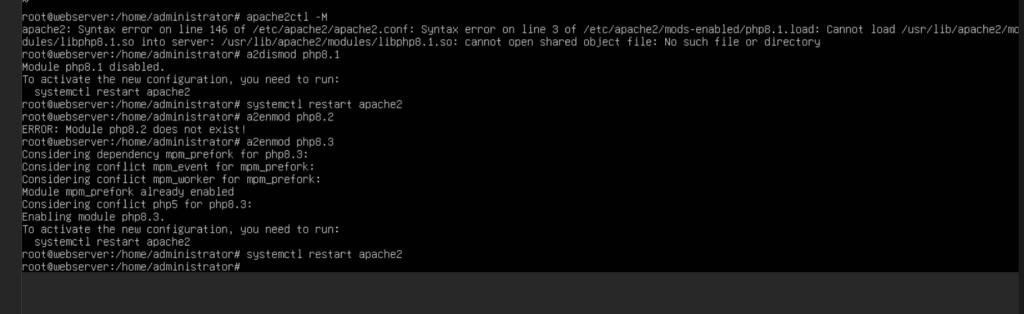

I upgraded my webserver to Ubuntu 24.04 LTS recently and ran into an odd little issue. Apache2 refused to start because a PHP module failed to load. Specifically, it was the PHP 8.1 module, which had been replaced in the repos by 8.3. This ended up having a quick fix to get things in order.

All I needed to do was disable the old PHP mod and enable the new one. I used this reference for which versions would be supported in 24.04 to enable the correct version. Overall a nice quick fix, and my webserver was back in operation.

> sudo a2dismod php8.1

> sudo systemctl restart apache2

> sudo a2enmod php8.3

> sudo systemctl restart apache2
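
To see which PHP mod Apache has enabled before and after the swap, something like the sketch below could help. It assumes the standard Debian/Ubuntu layout, where each enabled module has a `.load` entry under `/etc/apache2/mods-enabled`. The function name `enabled_php_mods` is made up for illustration and is not part of Apache.

```shell
# Hypothetical helper: print the names of the PHP modules Apache has enabled.
# Assumes the Debian/Ubuntu layout, where each enabled module has a
# <name>.load entry under /etc/apache2/mods-enabled.
enabled_php_mods() {
    mods_dir="${1:-/etc/apache2/mods-enabled}"
    for f in "$mods_dir"/php*.load; do
        # Skip the unexpanded glob when no PHP module is enabled.
        [ -e "$f" ] || continue
        basename "$f" .load
    done
}
```

Calling `enabled_php_mods` before the fix should list `php8.1`, and `php8.3` afterwards. Running `sudo apache2ctl configtest` before the final restart is a quick way to check that the config loads cleanly.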

Cura and ESP COM Ports

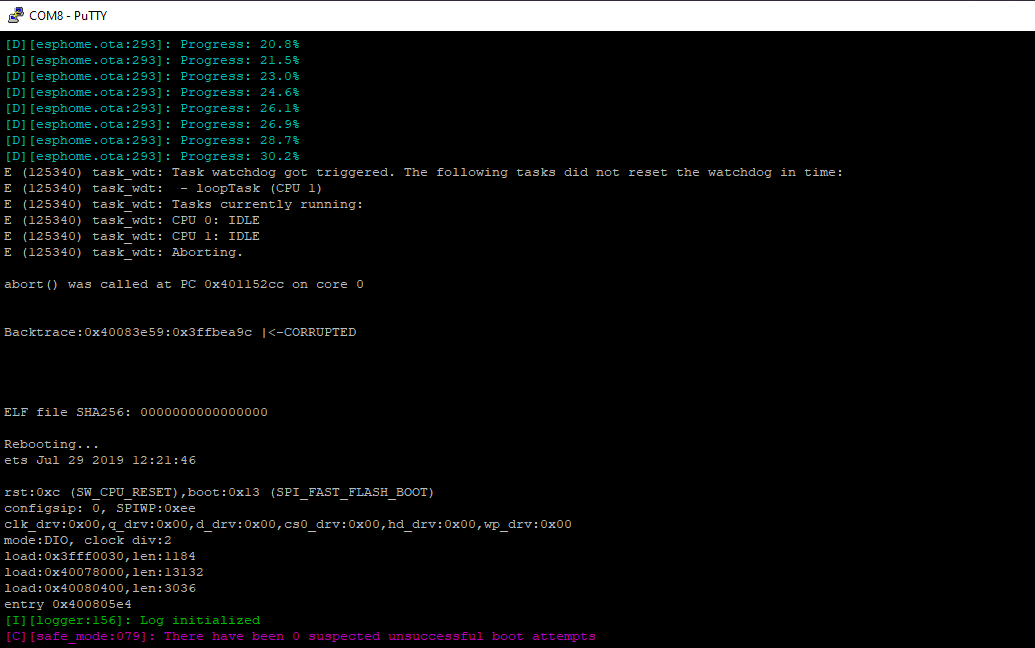

While attempting to debug a problematic ESP32 recently, I needed to connect to it via the COM port from my PC in order to get more logging from the unit. In attempting to do this, I ran into a very peculiar interaction: I couldn’t connect to the ESP COM port while Cura was open. I guess it was attempting to connect to a 3D printer on the port? Whatever the cause, it was a rather annoying encounter. Once I closed Cura, I was able to connect and get the logging I needed.

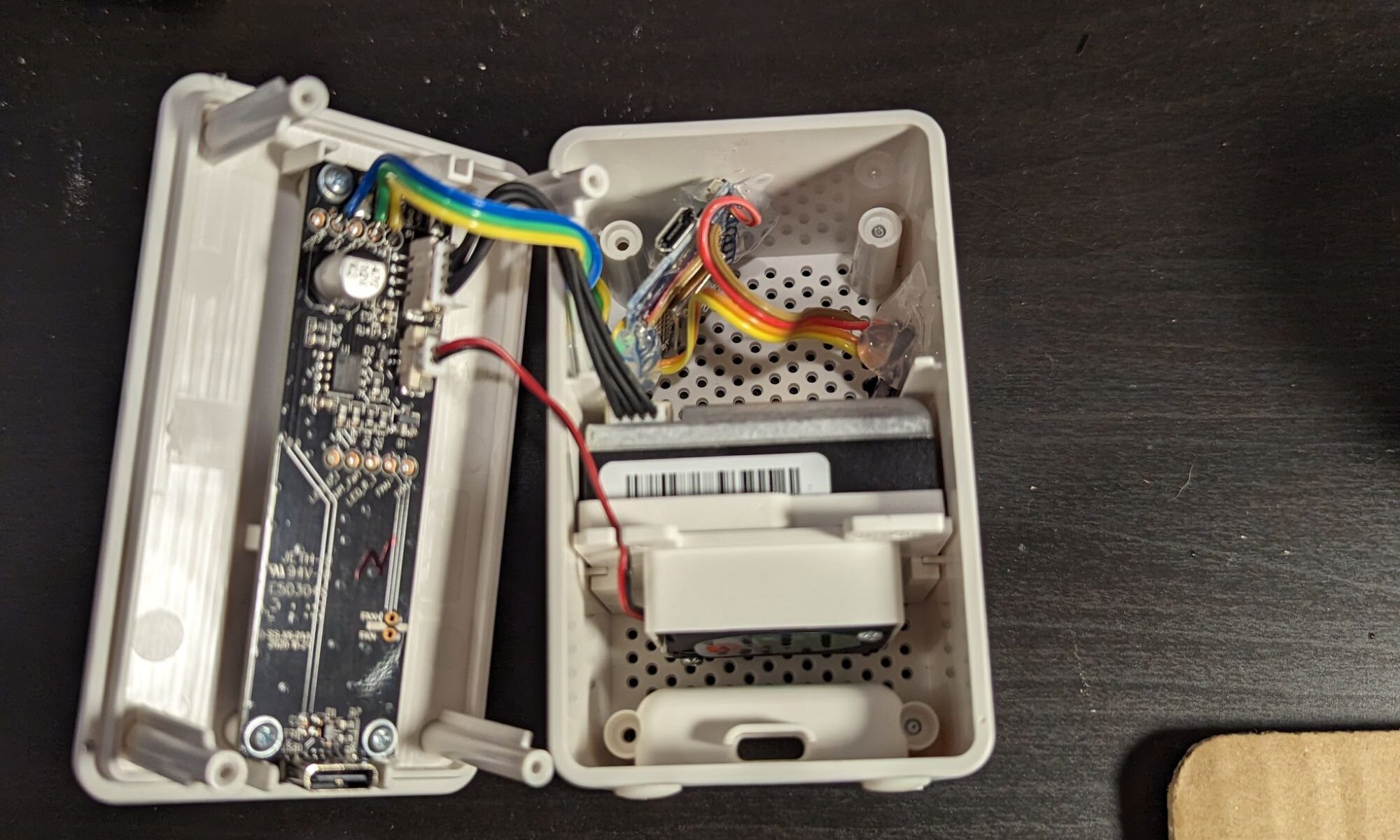

BME680 and ESP

I’ve been trying to add some BME680 sensors to my IKEA air quality sensors. My goal was to add a few more environmental sensors around my house to keep track of temperature, humidity, etc. In doing this I also ran into some odd problems with my D1 minis that I had to work through.

Continue reading “BME680 and ESP”

Putty Serial Connections to ESP Devices

While working on debugging an ESP32, I needed to get the log for the unit when WiFi was down. To do this I needed a serial connection, which can be done using PuTTY. Since this was the first time I used PuTTY to connect to a serial device, I figured I’d give a short how-to on connecting to an ESP32 with it.

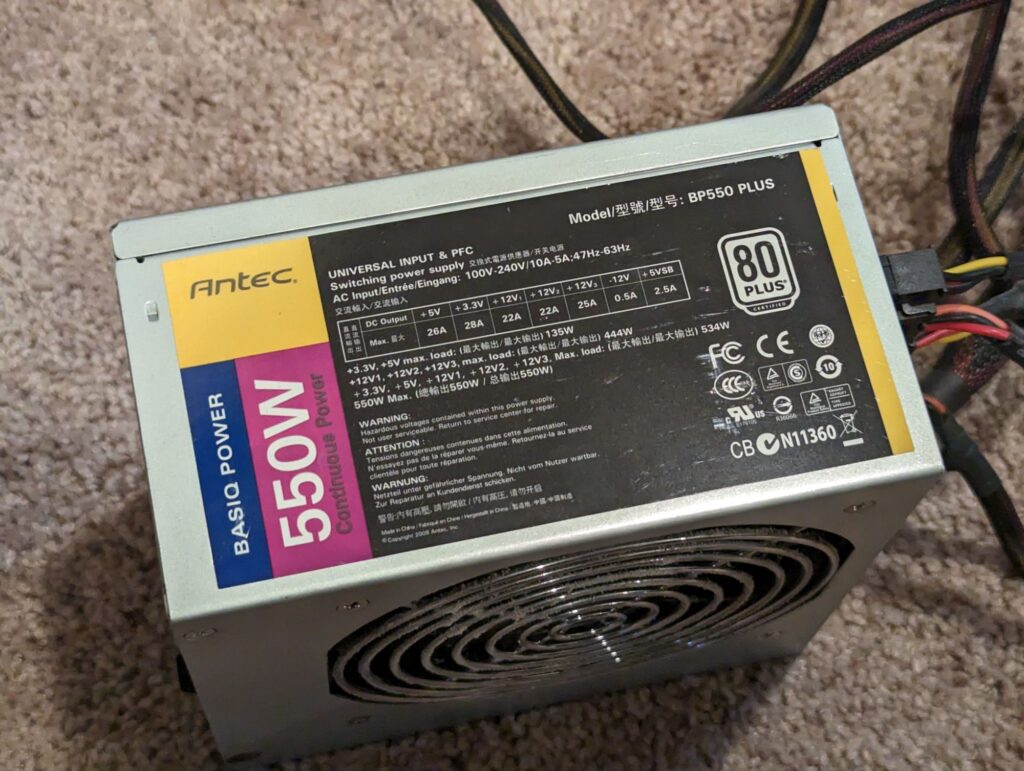

Continue reading “Putty Serial Connections to ESP Devices”

Ode to a PSU

The PSU that could, the Antec Basiq Power 550. It ran for 11 years, 24/7 in my NAS, and a few years before that in an old desktop. For a $55 PSU on sale from Newegg, I’d call that pretty good.

Aug 2011 – Nov 2024

Nova launcher app switcher stops working

I’ve run into a problem on my phone where the app switcher stops working. This only seems to happen when using a third-party app launcher like Nova Launcher on a Pixel phone. A solution is to go to the Pixel Launcher in the Android app settings and force stop it. Even if the Pixel Launcher app is disabled, still click the force stop button. This clears up the issue and allows the app switcher to work again.

Bambu Lab X1C Poop Bin

I’ve printed a few multicolor prints with my Bambu printers and yet I still have no way to collect the filament poops other than grabbing them off the floor. How about I try to fix that.

Continue reading “Bambu Lab X1C Poop Bin”

Finding Stuck Automations

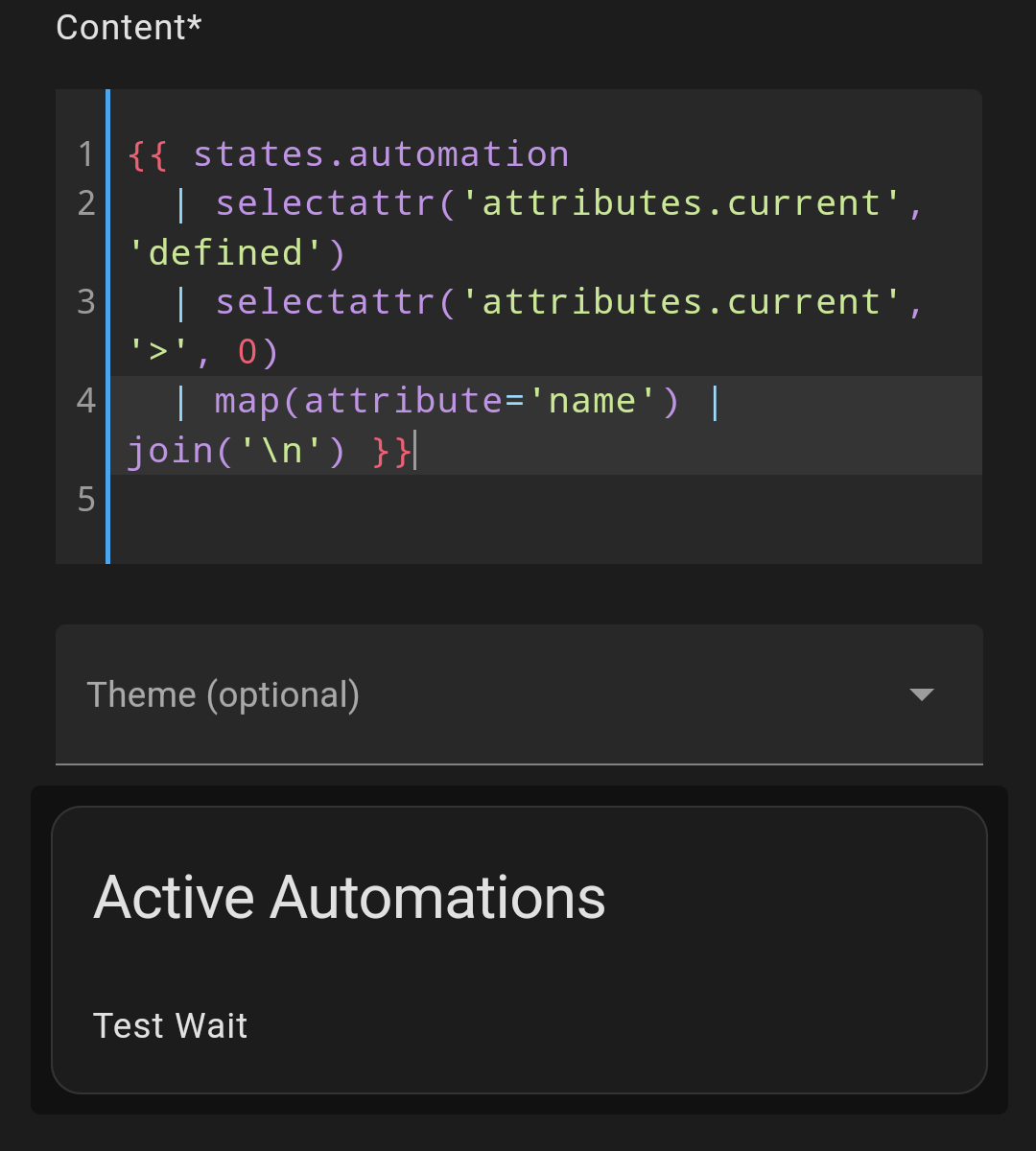

I run into a case from time to time when I’m developing new automations where they end up hanging and stop responding. Since most of my automations are set to only allow one instance to run at a time, this causes future calls to the automation to fail because the hung run is still active.

Continue reading “Finding Stuck Automations”

ESPDeck 3 + LED Backlighting

Ever since I integrated per-key LEDs in the NFC Deck, I have wanted to update the ESP Deck design to incorporate them (and update some of my keypads to include them too). I finally got around to both of these, swapping the switches for clear switches on one of my ESP Decks and integrating the LED lighting.

Continue reading “ESPDeck 3 + LED Backlighting”